I joined Discord's Safety team in summer 2021, just as the team was undergoing rapid growth. The team was small, under 20 people across all functions, and we were aggressively hiring engineers and data scientists. Many people on the existing team had not worked on a safety team before but had a passion for the space. We needed to be nimble enough to react to new threats as they arose. Our general philosophy during this time was to start with low-hanging fruit and gain some easy wins before moving on to bigger, more complex initiatives that would span months. This approach would build our shipping velocity, give employees time to learn the product space, and give us time to hire before we took on those larger initiatives.

Discord’s largest safety problem around this time was spam, specifically phishing via Direct Messages (DMs). Bad actors would send malicious links disguised as free games, or impersonate Discord staff, to steal accounts and then use those accounts to send more spam. A bad actor could take over an account without the owner noticing, because the owner still had access too. A parallel threat was crypto spam. Discord was a well-known gathering place for crypto enthusiasts, and instead of losing their accounts, these users were losing their crypto to scams in their DMs.

All planning and vetting happened with the Product Manager and Engineering Manager. The Engineering Manager assigned engineers to each feature based on their availability and our staffing needs. We also collaborated with UX writing, data science, legal, public policy, illustration, marketing, and social.

DM Gating

The first thing we needed to do was start collecting data on our spam problem by asking users directly. We wanted to gather enough data to distinguish friend from foe and eventually take action against bad actors automatically, without needing users to intervene.

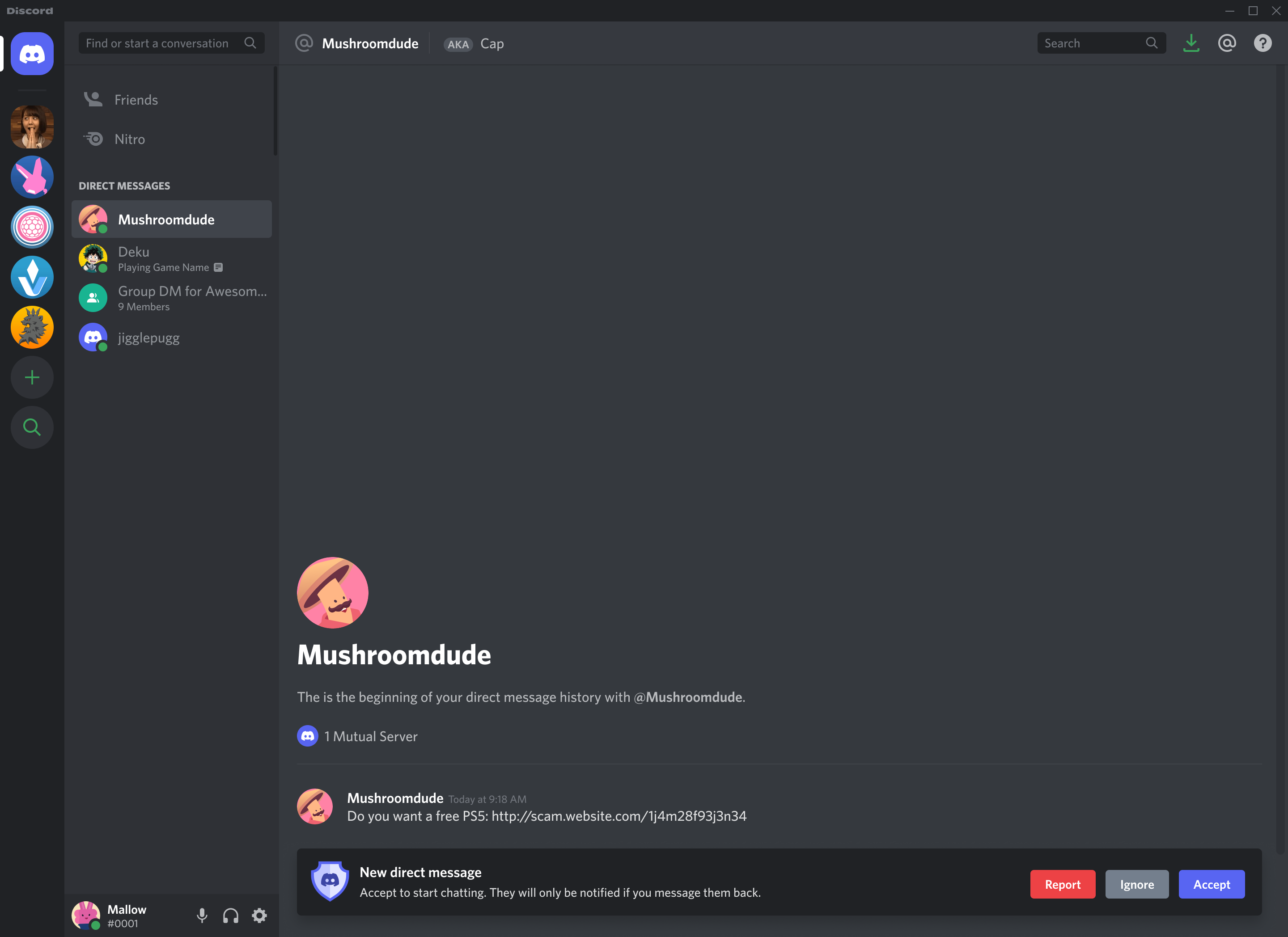

We started by adding an action bar to the bottom of the DM window whenever you received a message from someone new. The recipient could Report the user, Ignore the message (which also removed it from their DMs), or Accept the message if it wasn't from a spammer. We rendered all URLs in the message as plain text until the recipient clicked Accept. We also removed the “Add Friend” and “Block” buttons from the message UI to keep the focus on the action bar.
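To make the gating behavior concrete, here is a minimal sketch of a message request that starts out pending, resolves through one of the three action-bar choices, and only renders URLs as clickable links once accepted. This is not Discord's implementation; the `MessageRequest` class, its state names, and the simple `https?://` URL pattern are all hypothetical, chosen for illustration.

```python
import re
from dataclasses import dataclass
from enum import Enum

# Hypothetical states for a DM from someone the recipient hasn't talked to before.
class RequestState(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    IGNORED = "ignored"
    REPORTED = "reported"

# Deliberately simple URL matcher; a real client would use a proper link parser.
URL_PATTERN = re.compile(r"https?://\S+")

@dataclass
class MessageRequest:
    sender_id: str
    content: str
    state: RequestState = RequestState.PENDING

    def apply_action(self, action: str) -> None:
        """Resolve the request with one action-bar choice: 'accept', 'ignore', or 'report'."""
        transitions = {
            "accept": RequestState.ACCEPTED,
            "ignore": RequestState.IGNORED,
            "report": RequestState.REPORTED,
        }
        if self.state is not RequestState.PENDING:
            raise ValueError("request already resolved")
        self.state = transitions[action]

    def render(self) -> list:
        """Split content into (kind, text) segments; URLs are 'link' only once accepted."""
        if self.state is RequestState.IGNORED:
            return []  # ignored messages are removed from the recipient's DMs
        link_kind = "link" if self.state is RequestState.ACCEPTED else "text"
        segments, pos = [], 0
        for match in URL_PATTERN.finditer(self.content):
            if match.start() > pos:
                segments.append(("text", self.content[pos:match.start()]))
            segments.append((link_kind, match.group()))
            pos = match.end()
        if pos < len(self.content):
            segments.append(("text", self.content[pos:]))
        return segments
```

Until the recipient accepts, a URL comes back as an ordinary `"text"` segment, so the client has nothing to make clickable.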

DM Gating 2.0

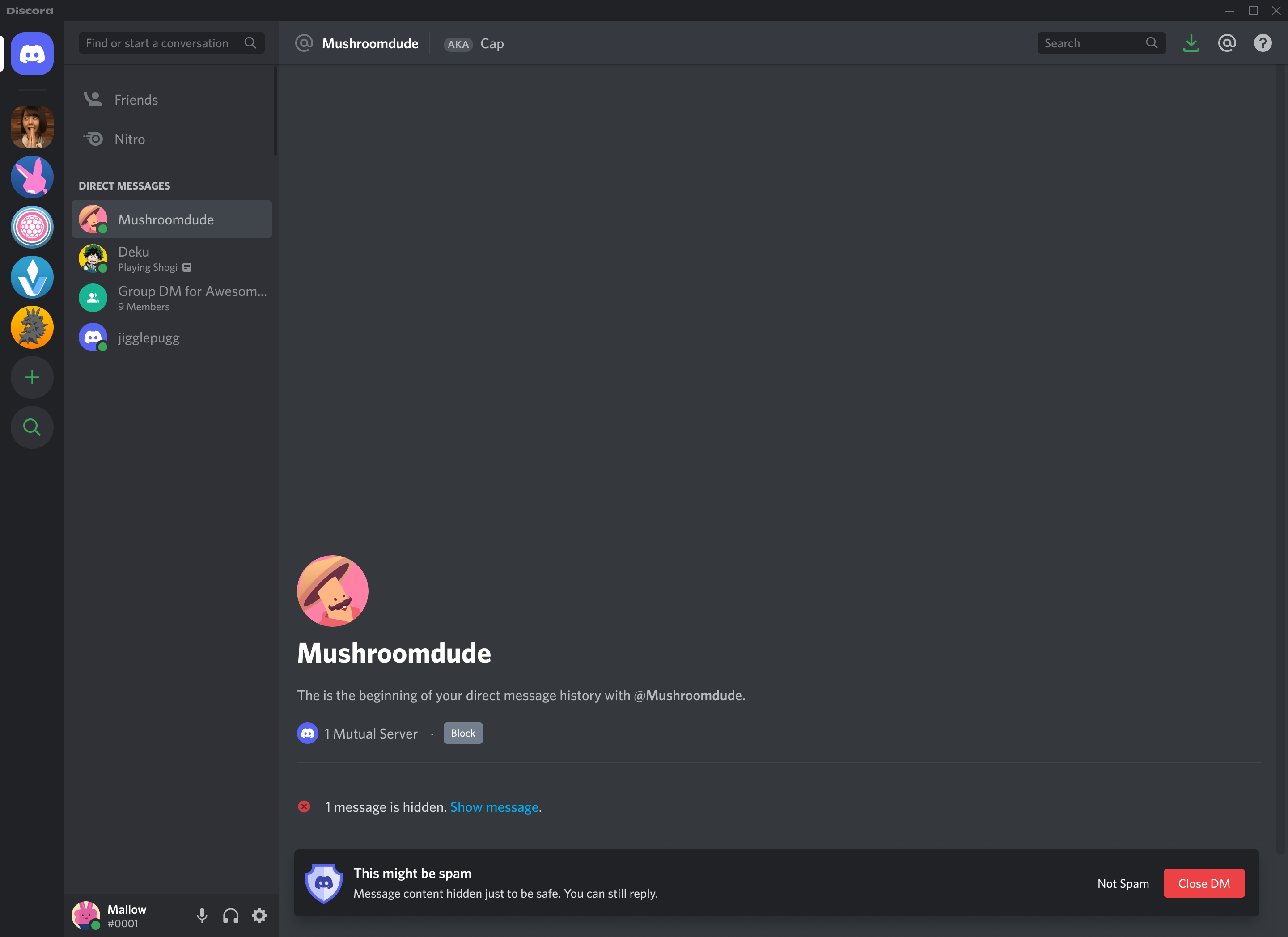

After we had gathered enough data to start reliably marking users as spammers, we began hiding those bad actors’ DM content behind a click-through interstitial. We wanted users to feel that we were being transparent, and we thought it was important that they could “check our work.”

If the user clicked “Not Spam,” the DM transformed back into the usual DM UI, and on the back end we recorded it as a welcome message. If the user clicked “Close DM” or “Block,” we recorded it as an unwelcome message, a signal that the sender was a spammer. These friend-or-foe signals were scored in aggregate rather than based on any one person’s decision.
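One way to picture "scored in aggregate" is a per-sender tally that counts each recipient at most once and only flags a sender after enough independent recipients have weighed in. The sketch below assumes that model. `SpamScorer`, `MIN_RECIPIENTS`, and `SPAM_THRESHOLD` are hypothetical names and values for illustration, not Discord's actual scoring.

```python
from collections import defaultdict

# Hypothetical thresholds for illustration only.
MIN_RECIPIENTS = 5     # independent opinions needed before judging a sender
SPAM_THRESHOLD = 0.8   # fraction of unwelcome votes that marks a likely spammer

class SpamScorer:
    def __init__(self) -> None:
        # sender_id -> {recipient_id: True if "Not Spam", False if "Close DM"/"Block"}
        self._votes = defaultdict(dict)

    def record(self, sender_id: str, recipient_id: str, welcome: bool) -> None:
        # One vote per recipient; a later action from the same recipient overwrites it.
        self._votes[sender_id][recipient_id] = welcome

    def is_likely_spammer(self, sender_id: str) -> bool:
        votes = self._votes.get(sender_id, {})
        if len(votes) < MIN_RECIPIENTS:
            return False  # too few independent recipients to decide
        unwelcome = sum(1 for welcome in votes.values() if not welcome)
        return unwelcome / len(votes) >= SPAM_THRESHOLD
```

Keying votes by recipient means that no single person, however many times they click, can get a sender flagged on their own.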

By launching DM gating, we increased our ability to detect bad actors by 1000%.

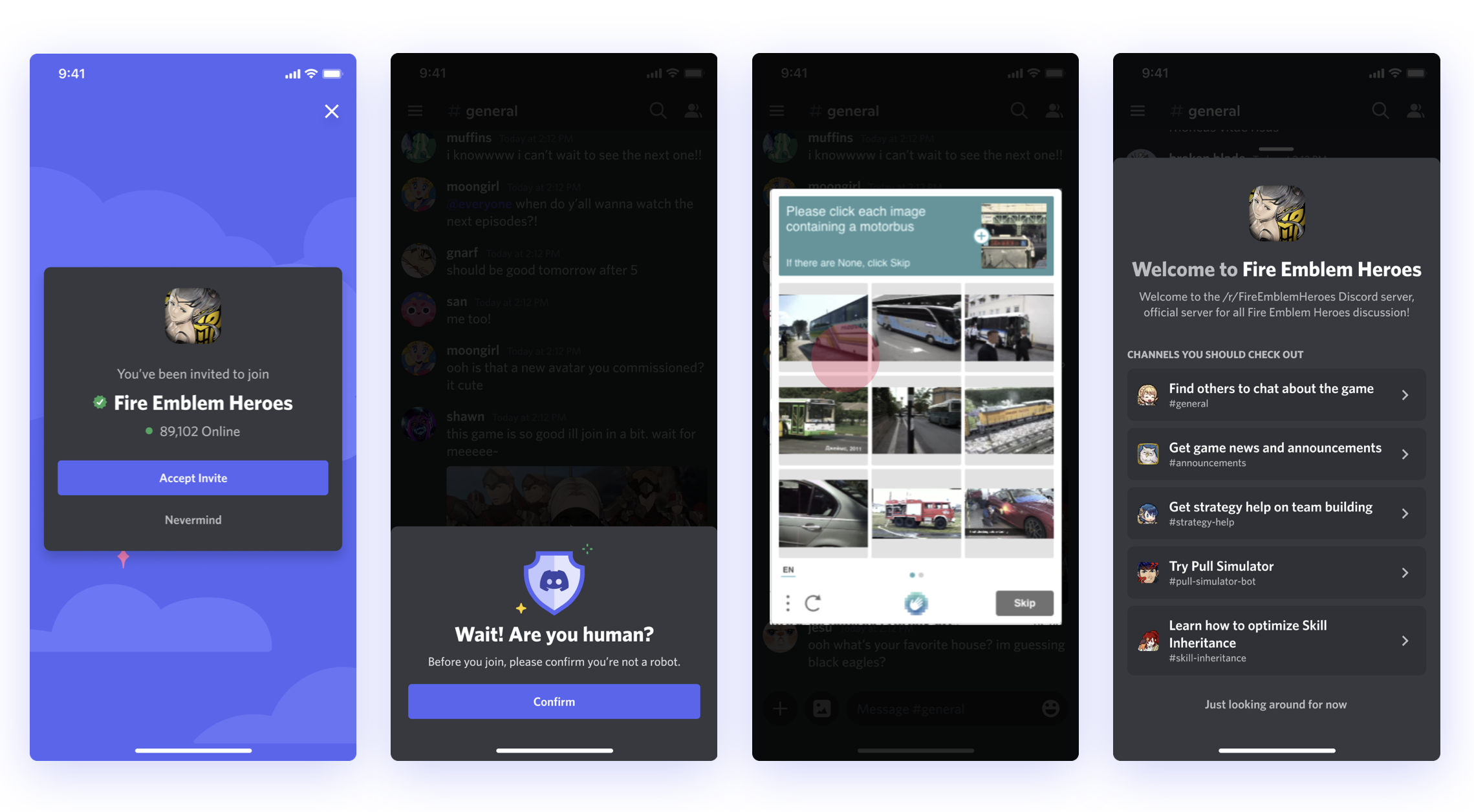

Adding captchas

Another mitigation was to slow spammers down before they could send those initial messages. A common tactic was to build bots that joined as many Discord servers as possible and mass-sent DMs to everyone in them. If we detected atypical behavior, such as joining too many servers in a short time, we would show a captcha. If a spammer was using a script to join servers and message people, the script couldn't solve the captcha, which essentially stopped it until somebody noticed. For a human, it was just an annoyance, so we worked to make these captchas as friendly as possible for regular folks who got caught up in them.

We didn't expect captchas to stop bad actors outright, only to act as a speed bump that slowed them down.
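A "too many servers in a short time" trigger can be modeled as a sliding-window counter per account. The sketch below assumes that approach. `JoinRateLimiter` and its default limits are hypothetical and are not the heuristics Discord actually used.

```python
import time
from collections import deque
from typing import Dict, Optional

# Hypothetical limits for illustration only.
MAX_JOINS = 10
WINDOW_SECONDS = 600.0

class JoinRateLimiter:
    def __init__(self, max_joins: int = MAX_JOINS, window_seconds: float = WINDOW_SECONDS):
        self.max_joins = max_joins
        self.window = window_seconds
        self._joins: Dict[str, deque] = {}

    def needs_captcha(self, user_id: str, now: Optional[float] = None) -> bool:
        """Record a join attempt; return True if it exceeds the allowed rate."""
        now = time.monotonic() if now is None else now
        joins = self._joins.setdefault(user_id, deque())
        # Drop join timestamps that have fallen out of the sliding window.
        while joins and now - joins[0] >= self.window:
            joins.popleft()
        # Gated attempts still count, so a script that keeps retrying stays gated.
        joins.append(now)
        return len(joins) > self.max_joins
```

A person who occasionally joins a few servers never hits the limit. A script joining dozens of servers within minutes is challenged as soon as it goes over the limit.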

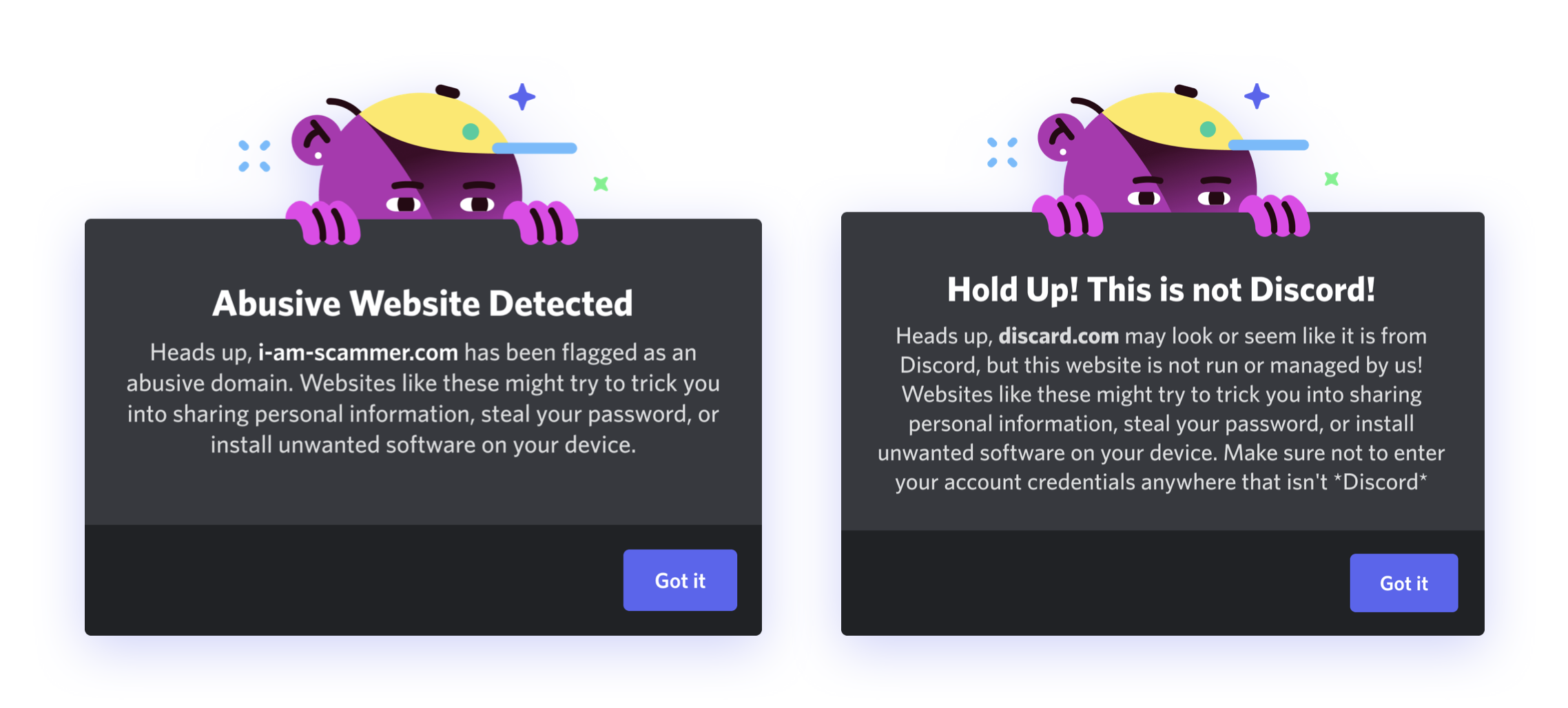

Educating users

On top of in-the-moment remediation and slowing spammers down, we wanted to educate users on spam and how spammers can deceive users on Discord. We launched a suite of modals that helped users understand the dangers of clicking on deceptive URLs or downloading dangerous .EXE files that could compromise their computer.

In addition to the features described here, we also improved two-factor authentication, tightened security around changing account information, improved the reporting flow, and added a read-only mode for suspicious accounts.

We shipped each feature as an experiment in a 4-6 week sprint, followed by a broad release, over the summer and fall of 2021.

It’s always hard to accurately measure something like “people see less spam.” We chose incoming spam reports as our proxy for this, and I’m happy to report that these features halved our incoming spam reports from users over the course of the 2021 calendar year.